A Dozen Reasons Why Test-First Is Better Than Test-Later

[Author’s note: This is an “omnibus” edition of a series of short newsletters I wrote, about two years ago.]

An editor of Dr. Dobb’s magazine once wrote to me, replying to my response to an article: “All the benefits [of Test-Driven Development] could be attained equally by writing tests after the code, rather than before.”[1]

Tests exercise software to be sure it’s doing what was intended. So, whether you use Test-Driven Development (TDD) or write unit-tests after coding, you’re presumably getting the same benefit. The safety-net gets built, either way. Right?[2]

So, all else being equal (resulting quality, maintainability, lines of test code, lines of implementation code, time spent writing code), what advantage is there to writing the test first?

The difference between TDD and test-after unit-testing is subtle, but important. TDD is much more than writing the unit test first.

Chew on each of these separately. They frequently overlap, and I’ve even resorted to a bit of intentional repetition. These are all just words, alas: You won’t really feel it in your bones until you do TDD for a while. Think of each of the following sections as a finger pointing at the Moon: You won’t actually see the Moon until you give up on looking at the finger.

Bruce Lee points a finger towards the Moon

1. You cannot write untestable code.

If you do TDD well—playing the game that you cannot add any code that hasn’t been earned through a failing test—you literally cannot write untestable code.

This may seem obvious at first, but what may not be so obvious is how easy it is to make a bit of code harder to unit-test by not writing the test first. If writing tests afterwards were that simple, people would do it. Mostly, they don’t.

Are you passing a non-virtual (sealed, or final) third-party dependency? How will you test all the permutations of interactions and responses with that object?

Did you create a method without a reasonable return value? (Note: Not all methods need a return value. Sometimes the most reasonable return value is none at all, aka void.)

Or (worse!) did you return an error code that the caller has to look up, or some object that the calling code now has to test against a null pointer?

Did you have a path that will throw an exception that the calling code has to deal with? Or (worse!) a Java “checked” exception that the client either has to wrap, declare as thrown, re-throw, or ignore?

Did you create a “helper” or “utility” method and, since it has no state, did you make it static? Yes, these are very easy to test. But their callers (typically someone on your team will be writing the calling code) are no longer easy to test.
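Here is a minimal Java sketch of the static-helper problem. It uses one of the best-known static calls in the JDK, `LocalDate.now()`, in place of a team-written helper. The class and method names (`StaticCallSeam`, `isOverdueHardWired`, `OverduePolicy`) are hypothetical. A test of the first caller can only run against whatever today happens to be. The second caller receives a `java.time.Clock`, so a test can pin the date with `Clock.fixed`. That is the kind of seam a test written first tends to ask for.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

class StaticCallSeam {
    // Hard to test: the static call LocalDate.now() reads the system clock,
    // so a test cannot choose what "today" is.
    static boolean isOverdueHardWired(LocalDate dueDate) {
        return LocalDate.now().isAfter(dueDate);
    }

    // Easier to test: the caller is handed a Clock, so a test can pin "today".
    static final class OverduePolicy {
        private final Clock clock;

        OverduePolicy(Clock clock) {
            this.clock = clock;
        }

        boolean isOverdue(LocalDate dueDate) {
            return LocalDate.now(clock).isAfter(dueDate);
        }
    }

    public static void main(String[] args) {
        Clock noonMarch2 = Clock.fixed(Instant.parse("2024-03-02T12:00:00Z"), ZoneOffset.UTC);
        OverduePolicy policy = new OverduePolicy(noonMarch2);
        System.out.println(policy.isOverdue(LocalDate.of(2024, 3, 1))); // true
        System.out.println(policy.isOverdue(LocalDate.of(2024, 3, 2))); // false: due today
    }
}
```

In production the same class would be constructed with `Clock.systemDefaultZone()`. Passing in a `Clock` is only one option. An interface or a lambda would serve just as well, as long as the test has some way to control that input.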

Most (not all) developers tend to write perfectly reasonable procedural code when they don’t write the test first. The problem with perfectly reasonable procedural code is that it tends to be “scripty,” walking through a scenario and using branching to differentiate scenarios. Good procedural code makes for smelly object code, and bloated functional code.

TDD actually encourages us to write script code, but in the tests themselves. Each test is a representation of a possible calling sequence for client code. And it frees us up to design the implementation however we want, using the features of our programming language to its full potential: Objects, stateless functions, lambdas, abstractions, mix-ins, contracts, you-name-it.

2. You are recording your thoughts.

Objects and classes never live independent of each other. Instead, they combine and interact to create all the required behaviors. A “unit test” is a test of one tiny “unit” of that larger “business model” behavior.

The thought-process for TDD is not “Oh, let me write a test for my code…well how can I do that if I haven’t written the code?” Here’s a more common internal dialog: “I need this particular object to provide this next new bit of behavior. When I give the object this particular state, then ask it to act on that state, here are the results I expect.”

Note that this internal dialog naturally occurs from a viewpoint external to the object. You’re recording a mini-specification for that new behavior in a simple, developer-readable, re-runnable automated test.

3. We design the interface from the viewpoint of calling code.

With TDD, often the required object class, or its methods, don’t even exist yet; so we’re effectively designing the naming and the interface (public method signatures) to that object through the tests. The unit tests are the first “clients” of that behavior. In this way, our interfaces (i.e., what objects are designed to do for other objects) are designed from the correct perspective: Each from the caller’s perspective.
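As a hypothetical sketch of what “the test is the first client” looks like, here is plain Java with no test framework (a real project would more likely use JUnit). The test method was written first, and it chose the names `Cart`, `add`, and `totalCents` along with their signatures. The nested `Cart` class came second and does just enough to satisfy it. All names are invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class CallerFirstDesign {
    // Written first. The test is the first client, so it picks the names and signatures.
    static void cartTotalsItsLineItems() {
        Cart cart = new Cart();           // given: an empty cart
        cart.add(2, 250);                 // two items at 250 cents each
        cart.add(1, 1_000);               // one item at 1000 cents
        long total = cart.totalCents();   // when: we ask for the total
        if (total != 1_500) {             // then: we expect 1500 cents
            throw new AssertionError("expected 1500, got " + total);
        }
    }

    // Written second: just enough implementation to satisfy the interface the test asked for.
    static final class Cart {
        private final List<Long> lineTotals = new ArrayList<>();

        void add(int quantity, long unitPriceCents) {
            lineTotals.add(quantity * unitPriceCents);
        }

        long totalCents() {
            return lineTotals.stream().mapToLong(Long::longValue).sum();
        }
    }

    public static void main(String[] args) {
        cartTotalsItsLineItems();
        System.out.println("cart test passed");
    }
}
```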

4. You have to know the answer.

Perfectly rational, professional developers, when faced with making a decision with insufficient information, will often choose something that “feels right,” and plan to ask for clarification later—presumably during their copious free time, at a very relaxed meeting full of open dialog, during some testing and “hardening” phase that never seems to happen.

Computer programming does not do well with vagueness. Computers will do what you tell ’em to do: nothing more, nothing less, and nothing different.

So if you don’t know for which day to journal a transaction that happens exactly at midnight, you’d better go find out! Ask the product advocate, business analyst, tester, or your pair-programming partner. If no one seems to know, then we need to ask an actual customer.
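Here is a hypothetical sketch of what happens once you do find out. Suppose, purely for illustration, the product advocate answers that a transaction stamped exactly 00:00:00 journals to the day that just ended, as if it were 24:00. That answer goes into the test before any implementation exists. If the real answer were the opposite, only the expected dates would change. `JournalDay` and `journalDateFor` are invented names; `LocalDateTime`, `LocalTime.MIDNIGHT`, and `LocalDate` are standard `java.time` classes.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.LocalTime;

class JournalDay {
    // Hypothetical rule, recorded from the (assumed) answer to our question:
    // exactly midnight is treated as 24:00 of the day that just ended.
    static LocalDate journalDateFor(LocalDateTime timestamp) {
        if (timestamp.toLocalTime().equals(LocalTime.MIDNIGHT)) {
            return timestamp.toLocalDate().minusDays(1);
        }
        return timestamp.toLocalDate();
    }

    public static void main(String[] args) {
        // The scenario we had to go ask about (2024 is a leap year).
        check(LocalDateTime.of(2024, 3, 1, 0, 0), LocalDate.of(2024, 2, 29));
        // Neighboring scenarios that came to mind while writing the first one.
        check(LocalDateTime.of(2024, 3, 1, 0, 0, 1), LocalDate.of(2024, 3, 1));
        check(LocalDateTime.of(2024, 2, 29, 23, 59, 59), LocalDate.of(2024, 2, 29));
        System.out.println("journal-day scenarios pass");
    }

    static void check(LocalDateTime stamp, LocalDate expected) {
        LocalDate actual = journalDateFor(stamp);
        if (!actual.equals(expected)) {
            throw new AssertionError(stamp + ": expected " + expected + ", got " + actual);
        }
    }
}
```

The naive `timestamp.toLocalDate()` would pass the one-second-past scenario and fail the midnight one, which is exactly the scenario nobody could answer until someone asked.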

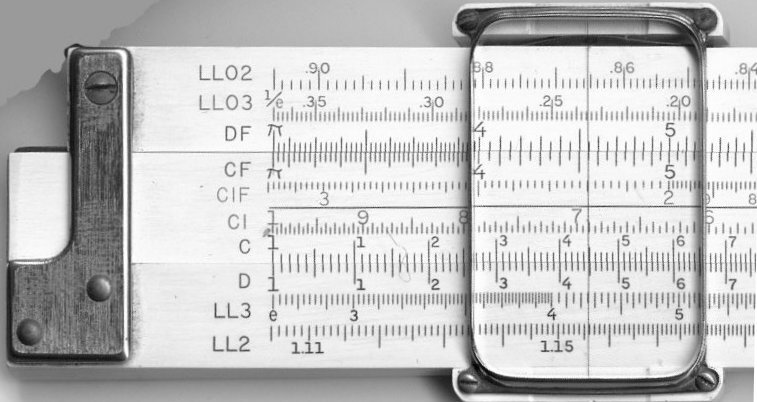

So we can’t write the test unless we know the answer we’d expect. You have to get out the calculator, slide-rule, or Google; or, better yet, the product advocate has already told you what to expect (ideally through a Cucumber scenario).

Remember slide rules?

What do we tend to do if we simply write out the whole 10240-bit quantum-encryption algorithm first, then write a unit test for it? We may assume that whatever answer our own code gives us (which would look like random static anyway) is correct! And that, folks, is a huge disaster waiting to happen.

We’re more likely to look for the right answer before ever writing the solution to that request, than we are to ask questions after we’ve already written the code.

5. Discovering Scenarios

Have you ever looked at a block of a mere dozen lines of old code (that you now have to enhance), and struggled to figure out all the permutations of what it does, and what could go wrong? If your team is writing unit tests afterwards, what if they missed an important scenario for that object? You could then mistakenly introduce a defect that would slip past the test suite.

When a team reassures me that they’re adding tests after the fact, we often discover they have perhaps 1/3 of the behaviors covered, or the tests don’t sufficiently check all side-effects that are clearly performed in the code. That leaves a lot of untested behavior that could break later, without anyone knowing!

With TDD, we don’t add functionality without a failing test, so we know we are much less likely to miss something. We’re actually “thinking in tests.” TDD requires us to keep a short list of our as-yet-untested scenarios, and we frequently think of more (or better) new scenarios while writing the tests and implementation for the more obvious scenarios.

It’s just so much easier to see those alternative paths and permutations that need testing as you are writing the code the first time, because that’s what your mind is on at the time. With TDD, you’re dealing with just one scenario at a time, so the code increases in complexity incrementally, while the growing test-suite protects the work you’ve already finished. And there’s always that short To-Do List at your side (or in your tests) for you to record the brainstorm of ideas that flood your mind.

6. It’s easier to write the test first.

If you try to write the tests afterwards, what happens if a test fails? Is the test wrong? Is the code wrong? Sure, it’s easy to figure out which is true…right?

Confirmation bias may play a role here. “Ah! Maybe that’s what’s supposed to happen. Yeah…that makes sense. Kinda…” So you “fix” the test to match the code, or — “No! Please stop! Nooooo…” >poof!<—you delete the failing test.

We’re human. We avoid pain. I suspect that’s why a lot of unit tests don’t get written after the fact, at all. We avoid those areas where we’re not sure we got it right, where the requirements were a bit vague, where we made some assumptions, or where we think it’s just not that important. You know, that “less important” code we keep hearing about (but we’ve never encountered).

7. You can’t place your bet after the wheel has stopped.

Writing the implementation first, and then testing the behavior that you wrote, is rather like trying to convince yourself that you really had wanted to bet on red, not black.

I recall just such a confirmation-bias disaster. In the mid-90s, our enterprise-backup software’s UNIX ports had a chunk of code based on “tar,” the UNIX tape-archival command. On restore, tar would mask out the super-user execution bit on any restored file. I recall seeing this code (I hadn’t written it…our architect had copied and pasted it directly from tar), and assumed that a smart, security-minded thing to do in tar was likely a smart thing for our product, too.

Except that our product was expected to restore a whole system to a bootable state.

No one noticed, for years. Then one day a client had to restore a root partition on a massive server, and the dang thing wouldn’t boot correctly. Oops!

It turns out that the architect, the executive developer, and the UNIX developer (moi) had all unknowingly conspired to ruin a customer’s day (more like “further ruin”).

All the test scripts we had written around restore functionality assumed that the tar code was right. None of those scripts checked the restored files’ SETUID bits.
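Here is a sketch of the check those scripts never made, written in Java with hypothetical names. It is not the original code, only an illustration of the missing assertion. Permissions are modeled as a POSIX octal mode, where `04000` is the set-user-ID bit (`S_ISUID` in `<sys/stat.h>`). The tar-style function strips that bit, which is sensible for a general-purpose archiver. A whole-system restore has to reproduce the archived mode exactly.

```java
import java.util.function.IntUnaryOperator;

class RestoreModeCheck {
    static final int S_ISUID = 04000;          // set-user-ID bit (octal), as in POSIX <sys/stat.h>
    static final int PERMISSION_BITS = 07777;  // setuid/setgid/sticky plus rwx for owner, group, other

    // Tar-style: drop the setuid bit on restore. Sensible for a general-purpose archiver.
    static int tarStyleRestoredMode(int archivedMode) {
        return archivedMode & PERMISSION_BITS & ~S_ISUID;
    }

    // Whole-system restore: reproduce the archived permission bits exactly.
    static int systemRestoredMode(int archivedMode) {
        return archivedMode & PERMISSION_BITS;
    }

    // The assertion none of the restore scripts made.
    static boolean preservesSetuid(IntUnaryOperator restore) {
        int archived = 04755;                   // rwsr-xr-x, typical of a setuid binary
        return restore.applyAsInt(archived) == archived;
    }

    public static void main(String[] args) {
        System.out.println(preservesSetuid(RestoreModeCheck::tarStyleRestoredMode)); // false
        System.out.println(preservesSetuid(RestoreModeCheck::systemRestoredMode));   // true
    }
}
```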

How human of us, to assert only that we couldn’t have possibly made a mistake. The scientific equivalent of this would be to carefully craft a flawed experiment to bolster a pet theory.

We had damaged our reputation, and risked the reputation of the product. Thankfully, UNIX ports took about 20 minutes, assuming we had access to the appropriate machine. And we were able to FedEx a magnetic tape overnight…

8. Good behavioral coverage, guaranteed.

With TDD, we want to see the test fail. That’s how we know it’s testing something new. And to make it fail, we have to add at least one assertion or expectation. This gives us what I call “behavioral coverage”: We’re not concerned with covering (or exercising) code. We’re covering real system behaviors.

Seems obvious, right? Apparently, not always. I’ve come across entire test suites that reached 80% code coverage, and tested…absolutely nothing.

How does this happen?

One team had been encouraged by their leadership to increase their code-coverage numbers: “Thou shalt increase code coverage by 10% per month!” They were successful in meeting this “motivational metric.”[3] The managers had called me in after the team had reached about 80%, because their defect rate had not decreased, at all.

I sat down with the team and they showed me their tests. None of the tests I saw had assertions/expectations. No behavioral coverage!

When I recovered from a dumbfounded state, I asked, as evenly as I could, if they could tell me what the tests I saw on the screen were meant to test. They quite sincerely answered, “Well, if it doesn’t throw an exception, it works!”

Not a good standard of quality for an institutional investment firm.[4]

When you write the test first, you have to ask the critical question, “What behavior am I testing here?”
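Here is a minimal Java sketch of the difference, using a hypothetical interest calculation with a deliberate bug: it returns yearly interest instead of monthly. The “coverage-only” test executes every line of the method and passes. The behavioral test states what the answer should be ($1,200.00 at 5% a year earns $5.00 a month) and fails, as it should. Plain Java stands in for a test framework, and all names are invented.

```java
class BehavioralCoverage {
    // Hypothetical code under test, with a deliberate bug: it forgets to divide by 12.
    static long monthlyInterestCents(long balanceCents, int annualRateBasisPoints) {
        return balanceCents * annualRateBasisPoints / 10_000; // BUG: yearly, not monthly
    }

    // "If it doesn't throw an exception, it works!" Executes the code, asserts nothing.
    static void coverageOnlyTest() {
        monthlyInterestCents(120_000, 500);
    }

    // Behavioral test: $1,200.00 at 5% per year should earn $5.00 (500 cents) per month.
    static void behavioralTest() {
        long actual = monthlyInterestCents(120_000, 500);
        if (actual != 500) {
            throw new AssertionError("expected 500 cents, got " + actual);
        }
    }

    public static void main(String[] args) {
        coverageOnlyTest(); // passes silently: full line coverage, no behavior checked
        try {
            behavioralTest();
            System.out.println("behavioral test passed");
        } catch (AssertionError e) {
            // prints: behavioral test caught the bug: expected 500 cents, got 6000
            System.out.println("behavioral test caught the bug: " + e.getMessage());
        }
    }
}
```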

9. You cannot mistakenly leave behind untested/undocumented code.

TDD doesn’t just change the order of tasks: it makes writing the unit test an integral part of writing the code.

The behaviors of your system are recorded in the tests. The way the system accomplishes those behaviors is in the implementation. Those are closely intertwined, of course, but they are different perspectives on the same behaviors. Tests tend to be easier for us mere mortals to follow, because they are simple step-by-step scripts, whereas any bit of implementation may have numerous paths, assumptions, responsibilities, and—perhaps most importantly—things that it’s not supposed to be doing.

Very early on in my career (circa 1990), I was tasked with looking for inefficiencies in our implementation of a pre-TCP/IP high-speed networking protocol. I found a rather complex-looking variable-length message buffer-padding algorithm… [waves hands wildly…it was, after all, 30 years ago].

It wasn’t slow, but it seemed a bit convoluted, and certainly difficult to decipher. I recall determining that I could reduce the if-then-else menagerie into one simple equation. “I’m so clever!”

But it turns out (because…no unit tests to serve as engineering spec, or safety-net!) that my calculation failed to consider that a “zero-byte” message still needed at least one empty message-body “block” sent. Now that may have been wasteful from a data transfer perspective, but one has to consider that (a) “empty” messages (mostly control messages) were rare compared to the massive amounts of data that were being transferred, and (b) it was actually part of the protocol. My calculation would have sent over no blocks, and the other end of the protocol would have—very rapidly, on a liquid-cooled Cray supercomputer—lost its mind.[5]

The original code could have had a comment that said, “This code cannot be reduced further because of the zero-length-message case.” But it didn’t.

Or there could have been a single very simple unit test for the zero case, which would have failed when I made my “amazingly insightful” change. But there wasn’t.

Not to leave you hanging with deep concern over the fate of 1990s-era national security: I was so amazed that my colleagues had missed this elegant solution that I ran into the boss’s office and told him about it. He turned to me and (very calmly) said, “What about the zero-length message case…?”[6]
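Here is a hypothetical reconstruction in Java. The story doesn't give the real block size or the original code, so `BLOCK_BYTES = 64` and the method names are invented. The “clever” one-liner is ordinary ceiling division: it is correct for every positive length, and it returns zero blocks for an empty message. The protocol rule from the story (an empty message still sends one empty body block) becomes a single guard. The zero-length check is the very simple unit test that wasn't there.

```java
class MessageBlocks {
    // Hypothetical block size; the real protocol's value isn't given in the story.
    static final int BLOCK_BYTES = 64;

    // The "clever" one-liner: ceiling division. Right for every length except zero.
    static int cleverBlockCount(int messageBytes) {
        return (messageBytes + BLOCK_BYTES - 1) / BLOCK_BYTES;
    }

    // Protocol rule from the story: even an empty message sends one (empty) body block.
    static int blockCount(int messageBytes) {
        return Math.max(1, cleverBlockCount(messageBytes));
    }

    public static void main(String[] args) {
        // The single, very simple unit test that would have caught the change.
        check(blockCount(0) == 1, "zero-length message still sends one block");
        check(blockCount(1) == 1, "one byte fits in one block");
        check(blockCount(64) == 1, "exactly one full block");
        check(blockCount(65) == 2, "one extra byte spills into a second block");
        // prints: clever version, zero case: 0
        System.out.println("clever version, zero case: " + cleverBlockCount(0));
    }

    static void check(boolean condition, String scenario) {
        if (!condition) {
            throw new AssertionError(scenario);
        }
    }
}
```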

10. TDD is more rewarding.

It’s the gamification of what would otherwise be an unpleasant chore. Let’s face it: writing unit tests (the chore) after the code (the fun part) is akin to eating cold vegetables after your dessert. With TDD, unit-testing is actually part of the fun of writing code.

With each failing test-scenario written, we “earn” the right to add a little bit more behavior to our system. We then take a swing at writing the implementation that will pass the test, and we get an instant reward with each passing unit-test.

Don’t ever forget: Humans write software. You are human. We like games. We respond well to fast, pleasant feedback. TDD is closer to a game than test-after. Test-after is a chore. Sure, professional developers need to be unit-testing their code, so they shouldn’t shirk doing their chores. But we’re still human. If we can make the chore a fun game, where’s the harm?

11. It’s very difficult to write smelly code.

Beck’s Four Rules of Simple Design may read like rules, but they’re more like natural outcomes of doing TDD. Believe me, I’ve tried to write stinky code test-driven, in order to create a sufficiently challenging refactoring lab. Small, discrete, independent tests tend to lead you naturally to write code that separates concerns without duplication, using the features of your programming language. When I tried to write TestedTrek test-first, the only way to stink up the code was to go back and alter the degree of isolation in the tests. I gave up, and instead created MessTrek (an untested ball of mud) first, then added legacy characterization tests to MessTrek to create TestedTrek. That’s why, in the TestedTrek lab, the tests were written afterwards.

12. TDD is not a testing technique.

Test-first turns unit-testing into a thinking tool, and a development process; not just a testing technique. Each test isn’t really a test until it passes for the first time. Before that, it’s an individual engineering specification: a unique little scenario describing a behavior that you want from your code. Software developers naturally “think in tests” or perhaps more accurately we think in “discrete scenarios.” Now we’re encouraged to actually write out each of those scenarios, justifying every addition to the code. Once you’re used to it, you won’t want to write code without a failing test scenario.

Conclusion

So it’s true that, if your code is well designed (and that’s a big “if”!), you can write the tests afterwards and get the same benefits from having the unit tests. But you’ll be missing the benefits of that early ease, safety, cleanliness, confidence, and fun.

Footnotes

[1] http://www.drdobbs.com/architecture-and-design/addressing-the-corruption-of-agile/240166890

[2] Laurie Williams did a study comparing test-first with test-after. The test-after team was finished before the test-first team. Alas, their code had more defects, because they didn’t sufficiently unit-test the code. In other words, they cheated. Not because they were cheaters, but because unit-testing code after the fact is harder…and boring. And you’re far more likely to miss an important case. https://collaboration.csc.ncsu.edu/laurie/Papers/TDDpaperv8.pdf

[3] The name for a metric that de-motivates people. To understand this counter-intuitive effect, I recommend Robert Austin’s book, Measuring and Managing Performance in Organizations.

[4] Yeah, just be thankful they weren’t working on self-driving cars!

[5] This was the 1990s, when Crays were the fastest system around, and HYPERchannel was the only way you could get a Cray to talk to your mainframe without falling asleep from sheer boredom. 50 Megabits/second!

[6] If only we had been pair-programming! Oh, the time we would have saved that day. [Rest in peace, Larry Allen. We miss you.]